There were many things to consider, and we may talk about some of those things in the future, but the aspect of penetration testing I want to talk about today is the infrastructure we use to conduct a penetration test.

Note: With a few minor exceptions, the same thought process applies to bug bounty hunting. If that’s more your thing, feel free to sed 's/penetration testing/bug bounty hunting/g'.

What do we need from our infrastructure?

Penetration testers and bug bounty hunters typically conduct their testing in an environment separate from their business-as-usual (BAU) environment. The main reasons for this include:

- The tooling we use for security testing is specialised and very different from the software we use day-to-day.

- We need to isolate the data collected throughout an engagement to protect sensitive information about our clients and targets.

- We need to run scripts and tooling that we would not be comfortable with running in an environment that has access to other sensitive files and resources.

- We want to allocate dedicated computing resources for testing so we are not confined to the computational power of our workstations.

- We need to allow long-running tasks and listeners to operate without interruption caused by our day-to-day movements.

- We need to allocate a static IP address or IP range from which all traffic originates, so our clients can be aware of where testing traffic is coming from and to perform any allow-listing that needs to occur.

These factors largely apply to all types of security testing; however, the way we meet these needs may differ depending on the type of test. For example, the tools we need for wireless network testing are very different from the tools we need to test a client’s external network footprint.

I specialise in web application security testing, so this post will focus on the infrastructure used for this type of engagement. You may have different needs and approaches if you do other forms of security testing.

What are some limitations of using VMs for our testing environment?

I am all for trying out new tools and techniques to find better ways of doing our work, but before we throw out a tried-and-tested approach, we should understand its strengths and limitations well enough to justify the research and upskilling that a different approach demands.

What I mean by this is: Why don’t we just use VMs?

I understand that I am not speaking for all security professionals, but my experience talking with people in infosec and watching what others do online suggests that virtualisation is the most common way we achieve the requirements listed above.

We spin up Kali or another Linux distribution in a VM, whether locally on our workstation, on a dedicated on-premises server, in a data centre or in the cloud.

With this approach, a few limitations and considerations came to mind that made me think it was worth exploring other options:

Maintaining a hardened SOE is a pain

Like any operating system we use, the operating system we use for testing needs to be hardened. One approach to hardening the OS used by each of our security testers is to maintain a single Standard Operating Environment (SOE) so that each of our testers can use a common hardened image.

This is an important but time-intensive process, and every hour spent updating or rebuilding an SOE is time that could be better spent hacking, upskilling or building out tooling.

Standard VMs make custom configuration difficult

On top of the effort that goes into updating and maintaining an SOE, there is the effort the testing team spends moving onto each new SOE. Significantly more time is lost at this stage, because the effort is multiplied across every tester who goes through the process, rather than falling on the individual or small team in charge of maintaining the SOE.

The issue here is that, while we may follow similar base methodologies, each tester has their own set of tools and configurations that they feel most comfortable using to be productive and get the job done. This means that each time a new SOE is pushed out, the tester needs to download the image, set up a new VM, then download and set up all their favourite tooling all over again.

A seasoned security professional might script this process or utilise some build tooling to make it a little faster, but I know I find this a little dull and laborious.

New technologies are available that could be viable alternatives

A number of technologies that have been widely adopted by software developers and operations teams may be suitable for what we need. More organisations are moving their computing into the cloud, and the pervasiveness of DevOps and web technologies in the software development space has brought Docker, Kubernetes, serverless computing, CI/CD pipelines and many other technologies into widespread adoption.

At this point, it seems sensible to reevaluate whether VMs are the optimal way to manage penetration testing infrastructure.

Are Docker containers a viable alternative?

There are a couple of specific features of Docker containers which piqued my interest and drove me to try using them in practice for bug bounty hunting and penetration testing to see if they would be a good fit.

Designed to be spun up and torn down

The lifecycle of a penetration test means that we regularly spin up some infrastructure for a given engagement, we carry out the work, then we reset or throw away our test environment ready to start fresh for the next engagement.

This lifecycle aligns so well with the life of a container; it was this aspect that first made me consider using containers for penetration testing. The ability to spin up and throw away a dedicated per-engagement testing environment was an alluring approach.
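To make that lifecycle concrete, here is a minimal sketch of a helper that assembles a `docker run` command for a throwaway, per-engagement container. The image name `pentest-soe:latest`, the `engagement-` naming scheme and the function name are all assumptions made for illustration; only the Docker flags themselves are standard.

```python
import shlex


def engagement_run_command(engagement: str, image: str = "pentest-soe:latest") -> list[str]:
    """Build a `docker run` command for a disposable, per-engagement container.

    The image name and naming scheme are hypothetical placeholders.
    """
    return [
        "docker", "run",
        "--rm",                        # remove the container as soon as it exits
        "--interactive", "--tty",      # attach an interactive shell
        "--name", f"engagement-{engagement}",
        "--hostname", engagement,
        image,
    ]


if __name__ == "__main__":
    # Print the command instead of running it, so the sketch works without Docker installed.
    print(shlex.join(engagement_run_command("acme-webapp")))
```

Because of `--rm`, exiting the shell throws the container away, which is the "start fresh for the next engagement" step. Anything that must outlive the container needs to be stored outside it.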

Scripted infrastructure management

Further to this, Docker containers are commonly managed programmatically using Infrastructure as Code (IaC) and orchestration tooling such as Kubernetes, Terraform and CloudFormation, with builds and releases scripted in CI/CD pipelines by developers the world over.

We could apply a similar approach to manage our testing infrastructure in an automatic, scripted way.

Computer, I have a new penetration test engagement. Please spin up a new environment that is hardened to our baseline standard and includes all the networking, storage and compute power that I need.

Sign me up.

Simplified hardening and patch management

Touching on that last point, one of the strengths of containerisation and IaC as a whole is that we can repeatably and reliably apply the same hardening to every container created from the base image. We just apply our hardening once to our container definition which effectively acts as our SOE.

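New security patches available for our base image? Need to update one of the tools we use in our primary workflow? Patch it once in the Dockerfile, and it’ll be in every container created from then on. (Kernel patches are the exception: containers share the host’s kernel, so those are applied on the machine running Docker.)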

Natively supports custom configuration

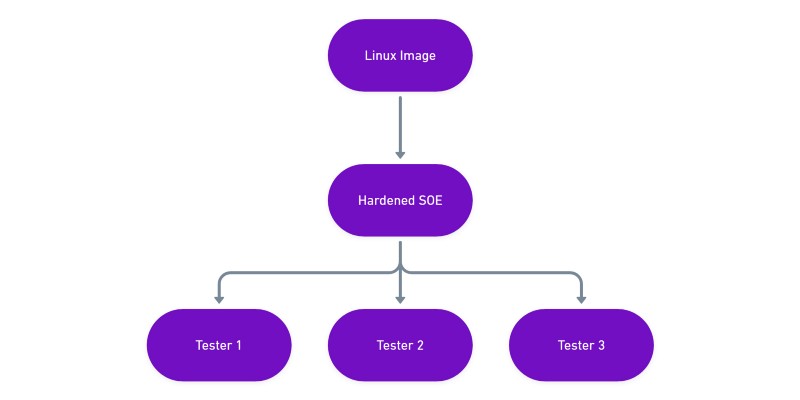

Docker also natively supports a way for individual testers to build their own customised image based on a hardened SOE image. With Docker, you can create an image that uses another image as its base. This means we can create a layered container definition, such as:

- We start with a base image, which might be a lightweight image such as Alpine, a more familiar Ubuntu image, or we can even use the official Kali Docker image.

- Using this image as a base, we create a team image (or a collection of images) that is used throughout the team. This image contains all the common tooling, configuration and hardening we’d expect from an SOE.

- Finally, each tester can create a personalised image that’s based on the common hardened SOE image.

This way we can get the best of both worlds between having a common hardened SOE and customised environment per tester, all of which can be automatically built each time it’s updated.

Finally, our testing environment architecture natively settles the age-old arguments: Bash or ZSH? Python or Ruby? Vim or Nano? It’s up to you, you can choose.
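The layering described above could be sketched as follows. This small generator renders a Dockerfile for the team layer and one for a personal layer; the registry path, package lists and `tester` user are hypothetical, and creating a non-root user stands in for whatever hardening a real SOE would apply. `kalilinux/kali-rolling` is the official Kali image on Docker Hub.

```python
def render_dockerfile(base_image: str, packages: list[str],
                      run_as: str = "tester", create_user: bool = False) -> str:
    """Render a Dockerfile that extends base_image (illustrative only)."""
    # Switch to root for installs, because the parent image may end as a non-root user.
    lines = [f"FROM {base_image}", "USER root"]
    if packages:
        lines.append(
            "RUN apt-get update"
            " && apt-get install -y --no-install-recommends " + " ".join(packages)
            + " && rm -rf /var/lib/apt/lists/*"
        )
    if create_user:
        lines.append(f"RUN useradd --create-home --shell /bin/bash {run_as}")
    # Drop back to the unprivileged user for day-to-day work.
    lines.append(f"USER {run_as}")
    return "\n".join(lines) + "\n"


# Hypothetical tag the team layer would be pushed under.
TEAM_IMAGE = "registry.example.com/pentest/soe:latest"

# Layer 2: the shared team image, built on the official Kali base.
team_dockerfile = render_dockerfile(
    "kalilinux/kali-rolling", ["nmap", "sqlmap", "curl"], create_user=True
)

# Layer 3: one tester's personal image, built on the team image.
personal_dockerfile = render_dockerfile(TEAM_IMAGE, ["zsh", "vim", "tmux"])

if __name__ == "__main__":
    print(team_dockerfile)
    print(personal_dockerfile)
```

Rebuilding the team image and then the personal image picks up any change made in the lower layer, so each tester keeps their own shell and editor without redoing the shared setup by hand.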

Limitations of Docker containers

These factors together build a fairly compelling case to at least trial containers as the basis for your penetration testing environment, but it’s worth noting that there are some limitations you will need to consider when designing your containerised environment.

Containers are ephemeral, so don’t lose your files

Docker containers are ephemeral by design, so what happens to your files when you throw away your container? They are lost to the ether, unless of course you design your architecture correctly.

Like any Linux machine, a Docker container can have storage mounted when you create it, and that storage remains after the container is destroyed. You can mount a directory on the host running Docker or, more likely, a network file share. This could be your on-premises network drive, an S3 bucket if you’re running in AWS, or a storage account if you’re running in Azure.
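As a rough illustration of the host-directory case, the sketch below builds the `--mount` arguments for a bind mount that gives each engagement its own directory. The host path `/srv/engagements`, the in-container path `/loot` and the image name are assumptions; the host directory is assumed to already exist, and a network share would first need to be mounted on the host (or attached through a suitable volume driver).

```python
import shlex
from pathlib import PurePosixPath


def loot_mount_args(engagement: str,
                    host_root: str = "/srv/engagements",
                    target: str = "/loot") -> list[str]:
    """Return `docker run` arguments that bind-mount a per-engagement host directory.

    host_root and target are hypothetical paths chosen for this example.
    """
    source = PurePosixPath(host_root) / engagement
    return ["--mount", f"type=bind,source={source},target={target}"]


if __name__ == "__main__":
    command = ["docker", "run", "--rm", "-it",
               *loot_mount_args("acme-webapp"),
               "pentest-soe:latest"]  # hypothetical image name
    # Printed rather than executed, so this runs without Docker installed.
    print(shlex.join(command))
```

Anything written under `/loot` inside the container then lands in the host directory and survives after the container is removed.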

An additional layer of networking

Docker manages a layer of networking between the host that is running Docker and the containers within the Docker instance. While this can all be configured to expose all the ports and services you need, it is an additional layer of networking that you will need to consider.

If you’re in the middle of an engagement and decide to run a listener on a given port, you will need to configure Docker to correctly route traffic to that port. This is entirely manageable, but will take some consideration and may be dependent on how you host your Docker environment.
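One way to think about this: ports are normally published when a container is created, so it helps to decide up front which listener ports an engagement is likely to need. The sketch below builds the `--publish` arguments for a list of TCP ports; the port numbers and function name are illustrative assumptions.

```python
import shlex


def publish_args(ports: list[int], bind_ip: str = "0.0.0.0") -> list[str]:
    """Return `docker run` arguments that publish each TCP port on the same host port."""
    args: list[str] = []
    for port in ports:
        if not 1 <= port <= 65535:
            raise ValueError(f"invalid TCP port: {port}")
        # Format is host_ip:host_port:container_port/protocol.
        args += ["--publish", f"{bind_ip}:{port}:{port}/tcp"]
    return args


if __name__ == "__main__":
    # Hypothetical listener ports reserved for reverse shells and callbacks.
    command = ["docker", "run", "--rm", "-it",
               *publish_args([4444, 8000]),
               "pentest-soe:latest"]  # hypothetical image name
    print(shlex.join(command))
```

On a Linux host, running the container with `--network host` is an alternative that skips the mapping entirely, at the cost of less isolation between the container and the host’s network.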

Primarily a terminal interface

Depending on how you run your virtual machines, you may be used to logging into a GUI via RDP or VNC. Docker containers don’t typically run these services, and while there are options to run GUI applications, you’ll most likely be interacting with the container via a shell.

For many, this will not be an issue, since most of the tools we use are run from the command line. You can still run your browser and Burp Suite locally and use a SOCKS proxy to route traffic through your testing environment, but this is worth considering if you’re used to running a GUI.
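A common way to get such a SOCKS proxy is SSH dynamic port forwarding. The sketch below builds that command, assuming the testing environment is reachable over SSH (either the Docker host or a container running an SSH server on a published port); the host name, user and local port are placeholders.

```python
import shlex


def socks_tunnel_command(host: str, user: str = "tester", local_port: int = 1080) -> list[str]:
    """Build an ssh command that opens a local SOCKS proxy through the testing host.

    host, user and local_port are hypothetical values for illustration.
    """
    return [
        "ssh",
        "-N",                              # no remote command, just forward traffic
        "-D", f"127.0.0.1:{local_port}",   # dynamic (SOCKS) forwarding on localhost
        f"{user}@{host}",
    ]


if __name__ == "__main__":
    # Printed rather than executed, so this runs without a reachable SSH server.
    print(shlex.join(socks_tunnel_command("testbox.example.com")))
```

With the tunnel up, pointing the browser’s or Burp Suite’s SOCKS proxy setting at 127.0.0.1:1080 sends that traffic out from the testing environment’s IP address.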

Summary

In short, Docker containers are a strong alternative to VMs for bug bounty hunting and penetration testing, though the specific tools you use and the environment you run them in may affect how viable containers are for you.

I have been using containers for web application testing for a while now, and while I did experience some growing pains in getting things set up in a way that works for our team, it is ultimately achievable. I hope to share some of these successes (and failures) in future blog posts.

I’ll leave you with a question: how do you manage your test environment and your toolkit? What have you revised about your testing flow that allowed you to provide better value to your clients? Let me know in the comments below, or hit me up on Twitter at @JakobTheDev.

Thanks for reading!
